Contextual AI

Founded Year

2023

Stage

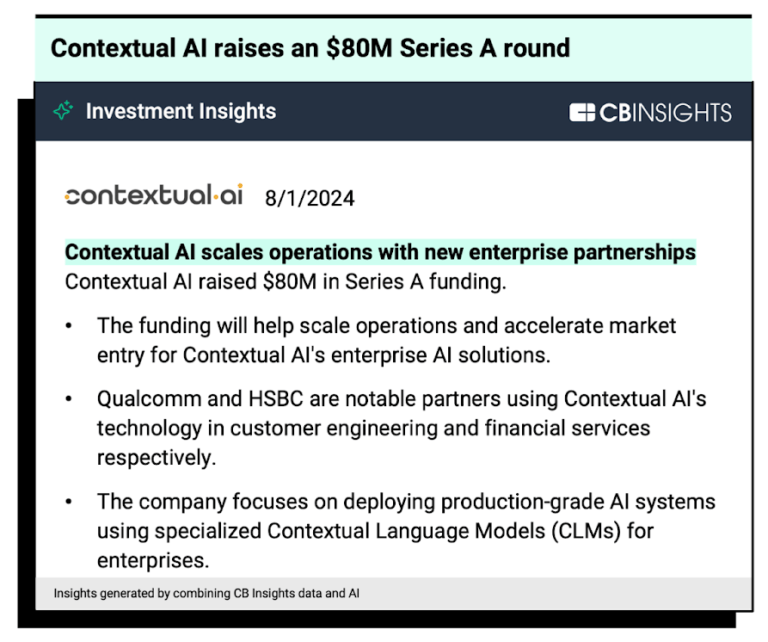

Series A | Alive

Total Raised

$100M

Last Raised

$80M | 1 yr ago

Mosaic Score

The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

-6 points in the past 30 days

About Contextual AI

Contextual AI focuses on retrieval-augmented generation (RAG) in the artificial intelligence (AI) sector. The company provides a platform that allows enterprises to create RAG agents aimed at improving productivity in expert knowledge work. Contextual AI serves Fortune 500 companies, offering solutions for subject-matter experts. It was founded in 2023 and is based in Mountain View, California.

Contextual AI's Products & Differentiators

Contextual AI Platform

The Contextual AI Platform is a full-featured and modular development platform for building enterprise AI apps that work on your data.

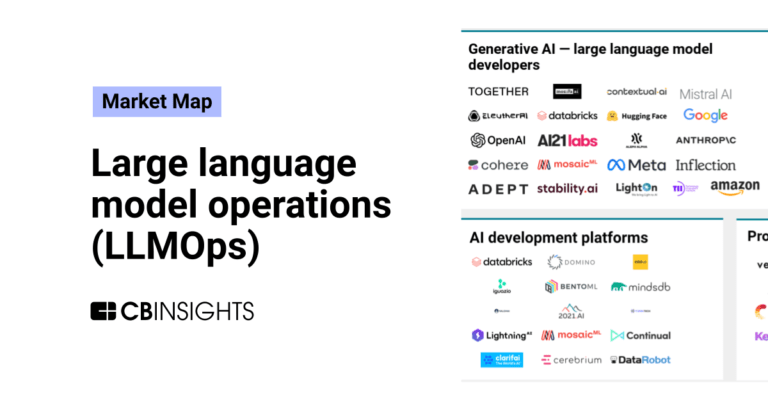

Research containing Contextual AI

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Contextual AI in 5 CB Insights research briefs, most recently on Sep 5, 2025.

Sep 5, 2025 report

Book of Scouting Reports: The AI Agent Tech Stack

Jul 14, 2023

The state of LLM developers in 6 charts

Expert Collections containing Contextual AI

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

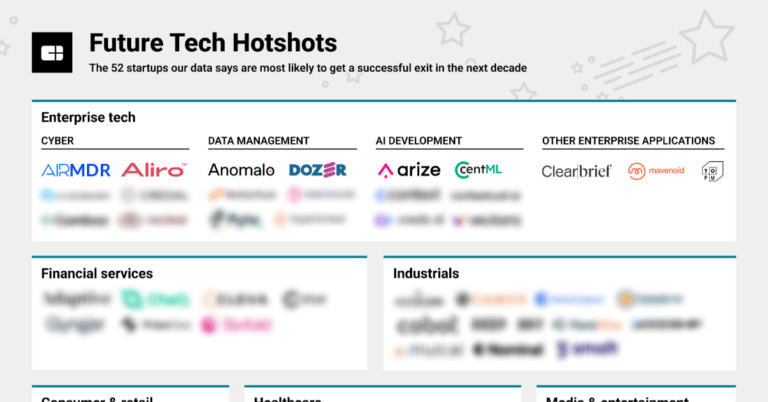

Contextual AI is included in 6 Expert Collections, including AI 100 (All Winners 2018-2025).

AI 100 (All Winners 2018-2025)

100 items

Generative AI 50

50 items

CB Insights' list of the 50 most promising private generative AI companies across the globe.

Generative AI

2,826 items

Companies working on generative AI applications and infrastructure.

Future Tech Hotshots

52 items

The 52 startups our data says are most likely to get a successful exit in the next decade.

Artificial Intelligence

10,402 items

AI agents

376 items

Companies developing AI agent applications and agent-specific infrastructure. Includes pure-play emerging agent startups as well as companies building agent offerings with varying levels of autonomy. Not exhaustive.

Latest Contextual AI News

Jul 14, 2025

RAG Agents Platform: Build the Future of AI 🚀

February 29, 2024

The landscape of artificial intelligence is rapidly evolving, and a key innovation driving this progress is retrieval-augmented generation (RAG). Today, Douwe Kiela, CEO and cofounder of Contextual AI, shared insights into this transformative technology, detailing its origins, current challenges, and potential impact on AI applications. His discussion with Ryan and Ben sheds light on how RAG is addressing limitations of traditional large language models (LLMs).

The Genesis of RAG: From Meta Research to Industry Adoption

Douwe Kiela’s work at Meta played a pivotal role in the early development of RAG. Initially, LLMs, while impressive in their ability to generate human-like text, were often plagued by inaccuracies and a tendency to “hallucinate” – presenting fabricated information as fact. Kiela’s research focused on augmenting these models with the ability to retrieve information from external knowledge sources. This approach, now known as retrieval-augmented generation, allows LLMs to ground their responses in verifiable data, significantly reducing the risk of hallucinations.

The core principle behind RAG is simple yet powerful: instead of relying solely on the knowledge encoded within its parameters during training, an LLM first consults a relevant database or knowledge base. This retrieved information is then incorporated into the prompt, providing the model with the necessary context to generate a more accurate and informed response. This process mirrors how humans often approach problem-solving – by first gathering relevant information before forming an opinion or making a decision.

Addressing the Hallucination Problem in AI

Hallucinations remain a significant hurdle in the deployment of LLMs, particularly in applications where accuracy is paramount. RAG offers a compelling solution by providing a mechanism for fact-checking and verification. By grounding responses in external knowledge, RAG minimizes the reliance on the model’s potentially flawed internal representations.

However, Kiela emphasized that RAG is not a silver bullet. The quality of the retrieved information is crucial; if the knowledge source is biased or inaccurate, the generated response will likely reflect those flaws. Furthermore, the effectiveness of RAG depends on the ability to retrieve the right information. This requires sophisticated retrieval mechanisms that can accurately identify the most relevant documents or data points from a vast knowledge base.

What strategies are being developed to improve the precision of information retrieval in RAG systems? And how can we ensure that RAG systems are robust against adversarial attacks designed to inject misinformation into the retrieval process?

The Critical Role of Context Windows

Another key aspect of RAG is the size of the context window – the amount of text that an LLM can process at once. Larger context windows allow models to consider more information when generating a response, potentially leading to more nuanced and accurate outputs. However, larger context windows also come with computational costs. Kiela discussed the trade-offs between context window size, computational efficiency, and performance.

Recent advancements in LLM architecture are enabling the development of models with increasingly large context windows. This opens up new possibilities for RAG, allowing models to leverage even more extensive knowledge sources. Contextual AI is actively exploring techniques to optimize RAG performance within these expanded context windows, focusing on efficient retrieval and effective integration of retrieved information. Contextual AI is dedicated to building practical applications of RAG.

Pro Tip: When evaluating RAG systems, focus not only on the accuracy of the generated responses but also on the efficiency of the retrieval process. A slow or unreliable retrieval mechanism can negate the benefits of RAG.

The implications of RAG extend far beyond simply reducing hallucinations. It enables LLMs to access and utilize information that was not available during their initial training, allowing them to adapt to new knowledge and evolving circumstances. This is particularly important in dynamic fields such as finance, healthcare, and scientific research. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks provides a foundational understanding of the technology.

Frequently Asked Questions About Retrieval-Augmented Generation

What is retrieval-augmented generation (RAG)?

RAG is a technique that enhances large language models by allowing them to retrieve information from external knowledge sources before generating a response, improving accuracy and reducing hallucinations.

How does RAG help prevent AI hallucinations?

By grounding responses in verifiable data from external sources, RAG minimizes the reliance on the model’s potentially flawed internal knowledge, thereby reducing the likelihood of generating false or misleading information.

What is the significance of context windows in RAG?

Larger context windows allow LLMs to consider more information when generating a response, potentially leading to more nuanced and accurate outputs, but also require more computational resources.

What role did Douwe Kiela play in the development of RAG?

Douwe Kiela’s research at Meta was instrumental in the early development of RAG, laying the foundation for its current widespread adoption and ongoing advancements.

Is RAG a perfect solution to the hallucination problem?

No, RAG is not a perfect solution. The quality of the retrieved information is crucial, and the system must be able to accurately identify the most relevant data from a vast knowledge base.

The future of AI is inextricably linked to our ability to build systems that are not only intelligent but also reliable and trustworthy. RAG represents a significant step in that direction, offering a practical and effective approach to mitigating the risks associated with LLMs and unlocking their full potential. DeepMind’s blog offers further insights into RAG.

What are your thoughts on the ethical implications of RAG, particularly regarding the potential for bias in retrieved information? And how do you envision RAG transforming specific industries, such as education or customer service?
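The retrieve-then-prompt principle described in the article can be illustrated with a minimal sketch. It is not Contextual AI's implementation: the toy `DOCUMENTS` list, the bag-of-words cosine scoring, and the function names `retrieve` and `build_prompt` are all assumptions made for illustration, and the final call to a language model is left out.

```python
import math
import re
from collections import Counter

# Hypothetical toy knowledge base (illustrative only).
DOCUMENTS = [
    "Contextual AI was founded in 2023 and is based in Mountain View, California.",
    "Retrieval-augmented generation grounds model responses in retrieved documents.",
    "Larger context windows let a model consider more text but cost more compute.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc: cosine(query_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Place the retrieved passages in the prompt ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("Where is Contextual AI based?", DOCUMENTS))
```

A production system would typically swap the word-count scoring for learned embeddings and a vector index; the sketch only shows where retrieval sits relative to prompt construction.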

Contextual AI Frequently Asked Questions (FAQ)

When was Contextual AI founded?

Contextual AI was founded in 2023.

Where is Contextual AI's headquarters?

Contextual AI's headquarters is located at 2570 West El Camino Real, Mountain View.

What is Contextual AI's latest funding round?

Contextual AI's latest funding round is Series A.

How much did Contextual AI raise?

Contextual AI raised a total of $100M.

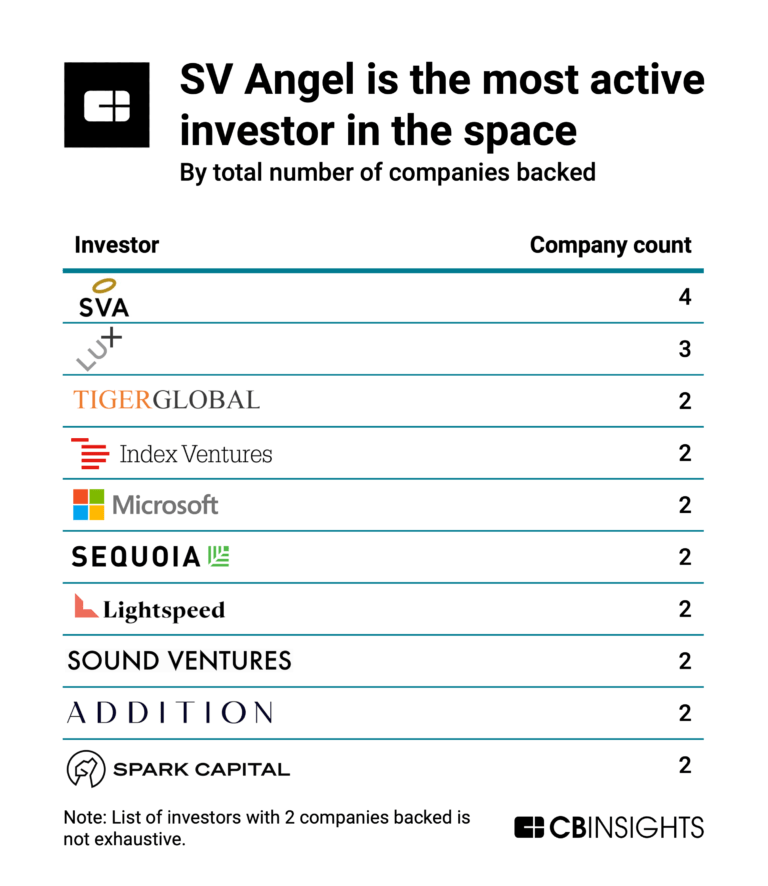

Who are the investors of Contextual AI?

Investors of Contextual AI include Greycroft, Sarah Guo, Bain Capital Ventures, Lightspeed Venture Partners, Lip-Bu Tan and 15 more.

Who are Contextual AI's competitors?

Competitors of Contextual AI include OpenPipe, Glean, 2021.AI, Convergence, Cohere and 7 more.

What products does Contextual AI offer?

Contextual AI's products include Contextual AI Platform and 4 more.

Compare Contextual AI to Competitors

01.AI focuses on artificial intelligence (AI) 2.0 technology and applications within the foundation model domain. The company provides products including large language models, enterprise AI solutions, and open-source AI models aimed at improving productivity and supporting businesses. 01.AI primarily serves sectors that require AI-related developments and productivity improvements, such as the technology and business services industries. It was founded in 2023 and is based in Beijing, China.

LlamaIndex specializes in building artificial intelligence knowledge assistants. The company provides a framework and cloud services for developing context-augmented AI agents, which can parse complex documents, configure retrieval-augmented generation (RAG) pipelines, and integrate with various data sources. Its solutions apply to sectors such as finance, manufacturing, and information technology by offering tools for deploying AI agents and managing knowledge. LlamaIndex was formerly known as GPT Index. It was founded in 2023 and is based in Mountain View, California.

MindHYVE.ai is involved in the development of Artificial General Intelligence (AGI), focusing on creating digital employees that operate autonomously across various sectors. The company's main offerings include intelligent agents for healthcare, legal, educational, and financial services, which aim to improve workflows and decision-making. MindHYVE.ai serves industries that require advanced automation and decision-support systems. It was founded in 2022 and is based in Newport Beach, California.

DeepSeek focuses on the development of artificial general intelligence (AGI) within the technology sector. The company offers a platform that facilitates application programming interface (API) calls for integrating artificial intelligence (AI) capabilities and a chat interface for exploring AGI applications. DeepSeek's products are designed to serve as efficient AI assistants for various user needs. It was founded in 2023 and is based in Hangzhou, China.

Allganize provides enterprise AI solutions, focusing on large language model applications across various industries. The company has a platform for building AI-driven business automation tools, integrating natural language processing to support customer inquiries and manage knowledge. Allganize serves sectors such as insurance, finance, and technology with its AI infrastructure. It was founded in 2017 and is based in Houston, Texas.

Aleph Alpha specializes in generative artificial intelligence (AI) technology for enterprises and governments within the artificial intelligence sector. The company offers language models and multimodal AI solutions for human expertise, compliance, and AI applications. Aleph Alpha's technology is tailored for various architectures and value chains. It was founded in 2019 and is based in Heidelberg, Germany.
