Weaviate

Founded Year

2019Stage

Series B | AliveTotal Raised

$67.6MValuation

$0000Last Raised

$50M | 2 yrs agoRevenue

$0000Mosaic Score The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

+16 points in the past 30 days

About Weaviate

Weaviate is a company that develops artificial intelligence (AI)-native databases within the technology sector. The company provides a cloud-native, open-source vector database to support AI applications. Weaviate's offerings include vector similarity search, hybrid search, and tools for retrieval-augmented generation and feedback loops. Weaviate was formerly known as SeMi Technologies. It was founded in 2019 and is based in Amsterdam, Netherlands.

Loading...

ESPs containing Weaviate

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

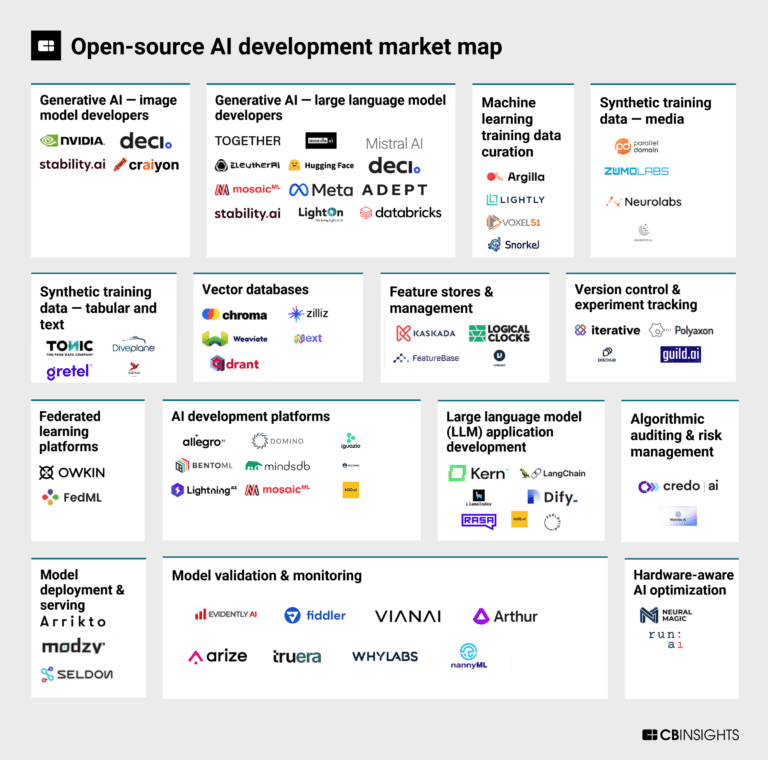

The vector databases market focuses on providing databases optimized for high-dimensional, vector-based data. These databases are designed to efficiently store, manage, and query large volumes of vectors — i.e., mathematical representations of data points in multidimensional space. Vector databases cater to a wide range of applications, including machine learning, natural language processing, rec…

Weaviate named as Leader among 10 other companies, including Oracle, Elastic, and Pinecone.

Weaviate's Products & Differentiators

Weaviate Vector Database / Weaviate Cloud

Weaviate is an open-source vector database that simplifies the development of AI applications. Built-in vector and hybrid search, easy-to-connect machine learning models, and a focus on data privacy enable developers of all levels to build, iterate, and scale AI capabilities faster. Our vector database can be run as a hosted cloud offering or self-hosted OSS deployment.

Loading...

Research containing Weaviate

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Weaviate in 6 CB Insights research briefs, most recently on Sep 5, 2025.

Sep 5, 2025 report

Book of Scouting Reports: The AI Agent Tech Stack

Oct 13, 2023

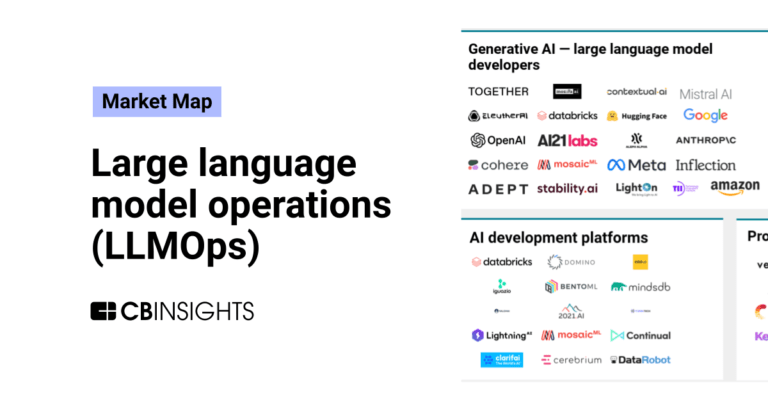

The open-source AI development market mapExpert Collections containing Weaviate

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Weaviate is included in 4 Expert Collections, including Artificial Intelligence.

Artificial Intelligence

12,580 items

Companies developing artificial intelligence solutions, including cross-industry applications, industry-specific products, and AI infrastructure solutions.

AI 100 (All Winners 2018-2025)

100 items

Generative AI 50

50 items

CB Insights' list of the 50 most promising private generative AI companies across the globe.

Generative AI

2,793 items

Companies working on generative AI applications and infrastructure.

Latest Weaviate News

Jul 24, 2025

Jul 24, 2025 AI-powered search demands high-performance indexing, low-latency retrieval, and seamless scalability. NVIDIA cuVS brings GPU-accelerated vector search and clustering to developers and data scientists, enabling faster index builds, real-time updates, and efficient large-scale search for applications like retrieval-augmented generation (RAG) , recommendation systems, exploratory data analysis, and anomaly detection. The latest cuVS release introduces optimized indexing algorithms, expanded language support, and deeper integrations with NVIDIA partners such as Meta FAISS and Google Cloud AlloyDB and Vertex AI , as well as Milvus , Apache Lucene , Elasticsearch , OpenSearch , Weaviate , Kinetica , and more. Whether you’re enhancing search in existing vector databases or building custom AI-powered retrieval systems, cuVS provides the speed, flexibility, and ease of integration needed to push performance to the next level. This post introduces the latest features and how they unlock AI-driven search at scale, along with new benchmarks. Building indexes faster on the GPU As the NVIDIA cuVS team strives to provide the most widely used indexing algorithms, DiskANN , and more specifically its graph-based algorithm called Vamana, can now be built on the GPU for a 40x or greater speedup over the CPU . NVIDIA is collaborating with Microsoft to bring the hyperscalable DiskANN/ Vamana algorithm to the GPU for solving important customer use cases. In the quest to make accelerated vector search available to all, NVIDIA is also partnering with hyperscaler transactional databases like Google Cloud and Oracle. Google Cloud AlloyDB is working to bring state-of-the-art index build performance for HNSW with 9x speedup over pgvector on the CPU . Oracle has prototyped cuVS integration with AI Vector Search in Oracle Database 23ai to help accelerate HNSW index builds and is seeing a 5x speedup end-to-end. Weaviate recently integrated cuVS to optimize vector search on the GPU . Initial tests show this integration slashes index build times by 8x using CAGRA, a GPU-native vector indexing method, on GPUs. This provides seamless deployment to CPUs for fast HNSW-powered search. NVIDIA has been working diligently with SearchScale to integrate cuVS into Apache Lucene , a widely used open-source software library for full-text search, to accelerate index builds on the GPU by 40x . Along with Lucene comes Apache Solr with 6x end-to-end index build speedup over CPU . The NVIDIA cuVS team is looking forward to OpenSearch 3.0, which will use cuVS for GPU-accelerated index building , and demonstrate up to 9.4x faster index build times. To round out the Lucene story, an Elasticsearch plugin will bring cuVS capabilities to the entire ecosystem. CPU and GPU interoperability In many RAG workflows, latency for LLM inferencing will swamp latency for vector database querying. However, data volumes are increasing rapidly in such workflows, creating long index build times and increasing costs. At this scale, an ideal solution is to use GPUs for index builds and CPUs for the index search. cuVS offers indexes that are interoperable between GPU and CPU, enabling AI systems to use existing CPU infrastructure for searching while taking advantage of modern GPU infrastructure for index building. Index interoperability offers AI applications faster index build times with potentially lower costs while preserving vector search on a CPU-powered vector database. Figure 1. Indexes built with NVIDIA cuVS can be used on a GPU or CPU search infrastructure This index interoperability between CPU and GPU is one of the most popular features of cuVS. While the library primarily targets GPU-accelerated capabilities, a trend is emerging for building indexes on the GPU and converting them to compatible formats for search with algorithms on the CPU. For example, cuVS can build a CAGRA graph much faster on the GPU and convert it to a proper HNSW graph to search on the CPU. cuVS offers similar support for building the popular DiskANN/Vamana index on the GPU and converting to a format compatible with its CPU counterpart for search. For more direct and comprehensive CPU and GPU interoperability, it’s recommended to leverage cuVS indexes through the Meta FAISS library. FAISS pioneered vector search on the GPU, and the library is now using cuVS to accelerate index builds on the CPU by 12x while accelerating their classical GPU indexes by 8x or more . FAISS recently released new Python packages with cuVS support enabled . Reduced precision, quantization, and improved language support cuVS recently added Rust, Go, and Java APIs, making accelerated vector search even more widely accessible. These new APIs can also be built from the same code base available through GitHub. The team continues to improve these APIs, adding more index types and advanced features like clustering and preprocessing. Binary and scalar quantization are common preprocessing techniques to shrink the footprint of vectors by 4x and 32x, respectively (from 32-bit floating point types). They are both supported in cuVS and demonstrate 4x and 20x performance improvement over the CPU, respectively. When it comes to special-purpose vector databases, Milvus was among the first to adopt cuVS . They continue to improve their support for GPUs with new capabilities, such as the new CAGRA capability to build a graph directly on binary quantized vectors. Accelerating high-throughput search cuVS now offers a first-class dynamic batching API, which can improve latencies for high-volume online search on the GPU by up to 10x . High-volume online search throughput, such as trading and ads serving pipelines, can be improved by 8x or more while maintaining low one-at-a-time latencies using the new CAGRA persistent search feature. Microsoft Advertising is exploring the integration of the cuVS CAGRA search algorithm for this purpose. Thanks to recent improvements to the prefiltering capability of the native GPU-accelerated graph-based CAGRA algorithm, it now achieves high recall even when most of the vectors (99%, for example) have been excluded from the search results. Aside from being a core computation in the CAGRA algorithm, kNN graphs are widely used in data mining. They are core building blocks in manifold learning techniques for reducing dimensionality and clustering techniques for data analysis. Recent updates to the nn-descent nearest neighbors algorithm in cuVS are enabling kNN graphs to be constructed out-of-core. This means that your dataset needs only be confined to the amount of RAM memory in your system. It enables iterative near-real-time exploratory data analysis workflows at massive scale , which is not tractable for the CPU. Get started with NVIDIA cuVS NVIDIA cuVS continues to redefine GPU-accelerated vector search, offering innovations that optimize performance, reduce costs, and streamline AI application development. With new features, expanded language support, and deeper integrations across the AI ecosystem, cuVS empowers organizations to scale their search and retrieval workloads like never before. Get started with the rapidsai / cuvs GitHub library, including end-to-end examples and an automated tuning guide. You can also use cuVS Bench to benchmark and compare ANN search implementations across GPU and CPU environments. cuVS is meant to be used either as a standalone library or through an integration such as FAISS, Milvus, Weaviate, Lucene, Kinetica, and more. Stay tuned for further developments as NVIDIA continues to push the frontier of AI-driven search.

Weaviate Frequently Asked Questions (FAQ)

When was Weaviate founded?

Weaviate was founded in 2019.

Where is Weaviate's headquarters?

Weaviate's headquarters is located at Prinsengracht 769A, Amsterdam.

What is Weaviate's latest funding round?

Weaviate's latest funding round is Series B.

How much did Weaviate raise?

Weaviate raised a total of $67.6M.

Who are the investors of Weaviate?

Investors of Weaviate include Zetta Venture Partners, New Enterprise Associates, Cortical Ventures, ING Ventures, Battery Ventures and 7 more.

Who are Weaviate's competitors?

Competitors of Weaviate include LanceDB, Crunchy Data, DataStax, Vectara, Activeloop and 7 more.

What products does Weaviate offer?

Weaviate's products include Weaviate Vector Database / Weaviate Cloud and 2 more.

Who are Weaviate's customers?

Customers of Weaviate include Morningstar and StackAI.

Loading...

Compare Weaviate to Competitors

Pinecone specializes in vector databases for artificial intelligence applications within the technology sector. The company offers a serverless vector database that enables low-latency search and management of vector embeddings for a variety of AI-driven applications. Pinecone's solutions cater to businesses that require scalable and efficient data retrieval capabilities for applications such as recommendation systems, anomaly detection, and semantic search. Pinecone was formerly known as HyperCube. It was founded in 2019 and is based in New York, New York.

Vespa specializes in data processing and search solutions within the AI and big data sectors. The company offers an open-source search engine and vector database that enables querying, organizing, and inferring over large-scale structured, text, and vector data with low latency. Vespa primarily serves sectors that require scalable search solutions, personalized recommendation systems, and semi-structured data navigation, such as e-commerce and online services. It was founded in 2023 and is based in Trondheim, Norway.

ApertureData operates within the data management infrastructure domains. The company's offerings include a database for multimodal AI that integrates vector search and knowledge graph capabilities for AI application development and data management. ApertureData serves sectors that require AI applications, including generative AI, recommendation systems, and visual data analytics. It was founded in 2018 and is based in Los Gatos, California.

Milvus is an open-source vector database designed for GenAI applications within the technology sector. The database supports searches and can handle large volumes of vectors, suitable for machine learning and deep learning tasks. Milvus serves sectors that require data retrieval and management solutions, such as artificial intelligence and machine learning industries. It was founded in 2019 and is based in Redwood City, California.

Qdrant focuses on providing vector similarity search technology, operating in the artificial intelligence and database sectors. The company offers a vector database and vector search engine, which deploys as an API service to provide a search for the nearest high-dimensional vectors. Its technology allows embeddings or neural network encoders to be turned into applications for matching, searching, recommending, and more. Qdrant primarily serves the artificial intelligence applications industry. It was founded in 2021 and is based in Berlin, Germany.

Turbopuffer is a company that specializes in providing serverless vector database solutions in the technology sector. The company's main service is a vector database built on top of object storage, offering cost-effective, usage-based pricing and scalability. The primary sectors that Turbopuffer caters to include the technology and data storage industries.

Loading...